The news has been full of leaked passwords for some popular services recently. We can see very big numbers being tossed around casually, both in accounts breached and potential dollar losses. But researchers like Brian Krebs (Link, Link) have noted that these numbers can be exaggerated for effect, and sometimes blatantly wrong.

So where exactly do these numbers come from? And how does the security industry validate that a breach has occurred at all? (If they choose to validate their information prior to release, which is another blog entirely. ) Lets take a look.

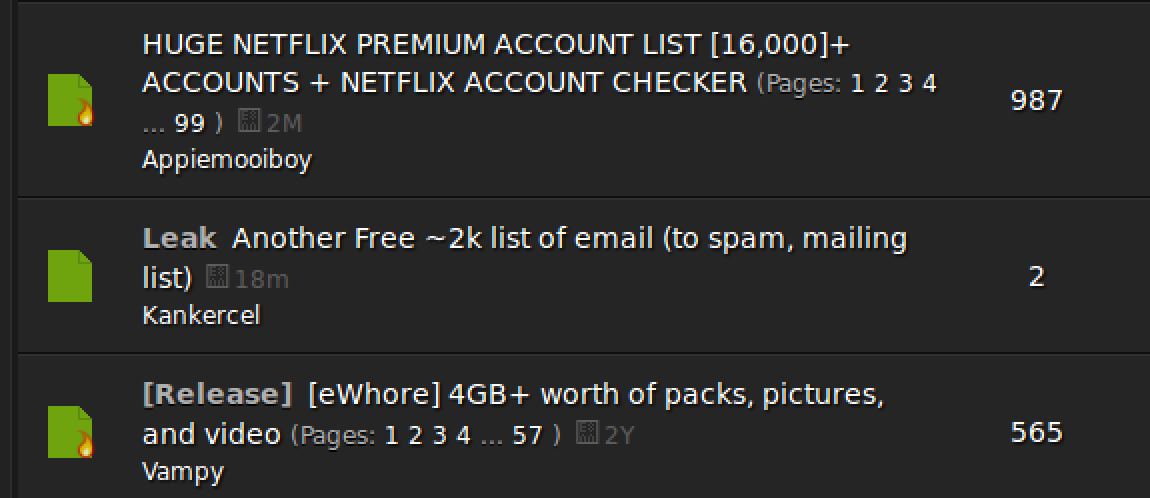

The first thing a hacker has to do is advertise. This can be on a forum, paste site, or even Twitter.

Lets assume our presumptive hacker is holding a trove of account information from a given forum. Most frequently this will include an account name, a login email, and a hashed password. Quite often there are more data fields associated with a given account, but since name, login email, and password are the bare minimum required for an account to work, these are the fields that must be true for the dump to have any utility.

I’m defining utility here as the ability for a malicious actor to exploit a compromised account successfully. Because trust is not easy to come by in the criminal underground, advertisement for password dumps can come with sample entries for buyers to verify prior to purchase.

This builds a bit of trust for the commercial transaction, but it also tips off a third-party that a dump exists, and a breach has possibly occurred. Security researchers will monitor these dump release announcements so they can let impacted parties know of the risk as soon as possible.

Sometimes folks will stop here. The dump is discovered, a threat actor has claimed responsibility, and user accounts are up for sale? Why not be the first to publish and help keep people safe? Well, because the criminal underground loves scamming each other almost as much as scamming you and me.

Dumps will be padded out with old or incorrect information to make a more lucrative product. Occasionally dumps will be repackaged wholesale to appear as a brand new breach, when there’s been no breach at all.

Even with real dumps containing real credentials, the ratio of padding to info can frequently reach 90 to 10 percent. Sending out an alarm incurs costs on its own, especially in an large enterprise environment where ensuring compliance with password policies is a non-trivial affair.

We as defenders have an obligation not to cause harm ourselves, so it’s imperative to do a little bit of legwork before telling everyone to panic. So where do we start?

0.5 – The gut check, or the Relative Bogosity Level. Does the span of account ages map to a plausible range for the web service in question? Are the passwords plausible? Are there too many or too few passwords of an expected type? Are there fragmented strings in the password field that look like a botched copy and paste job? Does the web service in question allow you to create new accounts with selected emails from the dump? Any suspected bogosity going in requires stricter scrutiny as we proceed.

1 – has the breached company themselves validated the dump in some way? This seems a touch obvious, but often times a company will release limited information validating a breach. While a company’s lawyers tend to forbid employees from saying anything substantive during an ongoing investigation, even small statements like “detected unauthorized access”, “Unforseen outage”, or “3rd party infrastructure issues” can suggest we can look at a subsequently discovered dump and take it more seriously than we would otherwise.

2 – is this new information? If the accounts can be found packaged with other, previous dumps, then the odds of dump bogosity rise accordingly. If I as the researcher can find a dump sample via google in a page published in 2012, then so could a scammer trying to sell worthless goods. The catch here is that almost all dumps include repackaged or otherwise irrelevant information, and some can be prohibitively large. We can get around this by taking a representative, small sample of the data, and applying insights gained to the entire package.

3 – how would you characterize the source? Is it a closed forum noted for previous high value dumps? Or was it a public pastebin link tweeted by a throwaway account? Sucessful, sustainable, and damaging criminal activity almost always requires supportive infrastructure and community, which makes some sources more likely for validated dumps than others. Further, is there an individual personality associated with the dump? Someone with a long, storied history of posturing on the Internet is most likely not a sophisticated hacker seeking to cash in on valuable data. What sort of claims is the hacker making about the data? Do they seem plausible? Would obtaining the data require special conditions like insider access?

4 – If someone else is reporting discovery of a dump, what’s their source? Much like middle school math, it is good manners to show your work. (Sidebar: some security firms will borrow phrasing from US government intelligence and say they cannot do this to “protect sources and methods.” Nonsense. Nobody’s life is at immanent risk when acquiring the data, and if you are not comfortable referring to a masked source, you most likely had no source at all. This goes triple for any “source” that is actually a download link to a PDF requiring an email to view.) What sort of vetting did the secondary source conduct? Again, they should tell you. Does the secondary source have a history of doomsaying or publishing content that seems lifted from the marketing department? A quality secondary source publishes analysis that is calm and measured about the scope of a threat, and most importantly *searches for competing hypotheses.* While there’s a lot of jargon out there about that last phrase, what it boils down to is quality analysis seeks to provide all plausible ways of looking at events, rather than the most frightening. Phrases that may indicate your secondary source is not doing serious analysis can include “Cyber Pompei”, “Sophisticated cyber attack”, or “Cyber Pearl Harbor.”

One commonality with all these steps is uncertainty. All four steps could indicate the data at hand has high bogosity, but that still doesn’t preclude the possibility of a breach. Further, password reuse makes it possible for the same account to be breached multiple times over a period of years.

Lastly, many of these criteria are analytic judgement calls rather than hard binaries. Again, like middle school math, it’s okay to be wrong if you show your work and made structurally sound inferences.

Ultimately, while we’d all like to be right 100 percent of the time, laying out sources, analyses, and conclusions in a structured, orderly way can provide other researchers a foundation to build upon and allows us all to improve together. It is always acceptable to be wrong, provided you are wrong for the right reasons. Should we execute a vetting process without a 100 percent definitive conclusion, the solution is to publish the source with its Relative Bogosity Level, and the analytic assumptions made, and let the reader decide for themselves.

Analysis of unreliable data is at its core providing context to inform a reader’s good judgement and assist in measured risk analysis, and as such, we have a responsibility not to gloss over unresolved problems when they exist.

For a more technical, detailed look at data validation, check out Alison Dixon’s paper at krebsonsecurity.com/wp-content/uploads/2014/10/vetting_leaks_final.pdf

For more on how to protect your accounts Wendy has a great post on security basics here: Link